Tuesday, December 22, 2009

Predictions for 2010: #3 – Open Source Principles Become Mainstream

In 2010, and then for years to come, we will see the principles of open source - transparency, community participation and collaboration - become entrenched in the mainstream tech sector. Beyond just the rhetoric of “community building”, the advantages of truly being part of a more vibrant, organic technology development and utilization process will prove far too important for software and technology companies to ignore. The proven benefits will drive imitation.

So, embracing the principles of open source will become expected in 2010 – and appear in a number of forms. Watch for more open APIs from companies of every stripe in order to extend the reach of their products. Even these open source newbies will use community development models to prompt a collaborative dialog and gain much greater familiarity with those using and extending their software.

Finally and specifically, two major developments will unfold during 2010 that will illustrate the reach of open source principles: the rise of Android-based phones and Google Wave. I wrote about Google Wave in 2009 and stand by my praise. This open set of web services that can synthesize the variety of social and collaborative tools is a shining example of what openness can bring to bear. And, Android will genuinely give Apple’s closed iPhone and Research In Motion’s closed BlackBerry platform some real competition, driving volumes that will earn its place in the mobile device market and help drive the principles of open source into the mainstream at the same time.

I’m sure you have more examples of open source principles reaching the mainstream, and I’d like to see them in your comments.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, December 21, 2009

Predictions for 2010: #2 – Collaborative BI Will Finally Arrive - and Stick Around

Google Wave and other web-based technologies will give rise to a new level of collaboration across analysis, reports and any variety of other enterprise information that will inform decisions better and faster. This will translate into the realization of “enterprise social computing.” See, for instance, Dion Hinchcliffe’s articles and insight on the enterprise social computing trend.

In 2010, we should see collaborative BI as a strategic, fundamental piece of the BI puzzle that helps organizations get even more return on their software investment. How will you put collaboration and BI to work in 2010?

Brian Gentile

Chief Executive Officer

Jaspersoft

Friday, December 18, 2009

Predictions for 2010: #1 - The CIO's Vendor Perspective is Forever Altered

CIOs at companies large and small can no longer spend seven figures on software in the hope that it will create positive ROI down the road. The economy has demanded that CIOs rethink their approach to software acquisition and investment, and they are looking more than ever before to subscription pricing, open source alternatives and participation in the development of the software they use.

Software development and delivery models such as open source, SaaS, cloud solutions and virtualization have all achieved a level of maturity that allows CIOs to depend on them and never look back.

Because of this, budgets will never reach the bloated levels seen prior to 2008. Projects going forward will require more controlled, cost-effective incremental milestones with greater control in the hands of IT managers.

And, because of this greater regimen and altered perspective of those vendors that supply IT, the CIO’s position will re-build credibility and re-earn its strategic seat at the table. I’ve talked with plenty of CIOs who share this belief and I’m eager to hear your comments and stories, too.

Brian Gentile

Chief Executive Officer

Jaspersoft

Thursday, December 17, 2009

A New Dawn, A New Day, A New Decade for IT

In the Information Technology market, there are a number of companies doing the same: borrowing, cashing out or just holding tight. But, I believe we will start to see a real recovery in 2010 and the IT industry will then forever work within a new economic reality.

The ultimate effect on the IT market will be positive. While IT spending forecasts show some growth in the year ahead, they will remain lower than pre-recession levels. Gartner, for instance, most recently predicted 3.3% year-over-year IT spending growth for 2010. This frugal spending environment is surely prompting CIOs to think differently about the way they acquire and deploy software. Times like these are also when we see the greatest innovations in technology, because we’re all forced to work harder and produce better products, technologies and services.

I’ve given this some thought as I’ve worked with my team at Jaspersoft to plan for the next year and wanted to share my top three predictions for 2010. I will offer one new prediction a day, for the next three business days, before going on a short blog leave until the New Year. In fact, I’m saving the boldest prediction for the first week of the New Year, when I’ll share with you some of my picks for open source companies to watch in the coming year.

So, tune in right here to get your daily dose (for the next three days). And as always, your comments are encouraged.

Brian Gentile

Chief Executive Officer

Jaspersoft

Sunday, November 29, 2009

My Letter to the EU: Stop the Delay of Oracle's Acquisition of Sun

---------------------------------------------------------------------------------------------------------

Attention: Neelie Kroes

Commissioner for Competition

European Union

I’m writing to urge you to stop the delay of Oracle’s acquisition of Sun Microsystems.

With the proliferation of open source software over the last decade, lower barriers to entry have naturally increased healthy competition in the enterprise software market. As a result, the software and technology market has grown far too dynamic to allow one acquisition to stifle competition. In this new marketplace, nearly anyone can create software with little upfront cost and then compete with even the most entrenched players.

Consider the company I lead, Jaspersoft, as just one example of hundreds where a company was founded based on the need to create better software than what was available from existing providers. We’ve created the most widely used business intelligence software in the world by using the open source software development model. Our success – as well as others – is at least partially due to MySQL and the impact it has had on the expansion of the database market using this same model. The market will continue to benefit when MySQL is once again able to fully compete.

With new software development models succeeding, innovation will continue unabated. And, it will happen in the database market. Ingres, PostgreSQL and MariaDB, for example, will only grow stronger and prove to be more competitive. And, new database products will emerge that supplement existing competition.

Furthermore, done properly, Oracle’s acquisition of Sun should serve the market, community and customers even better. The company will be put in a position where it can play an important leadership role in helping its global peers more surely understand the open source model of shared development and community collaboration in addressing rapidly changing customer requirements.

Lastly, I urge you to come to a conclusion on this matter before January. With its delay, the EU is neither hastening more competition nor furthering Oracle's business. In this case, government should step aside and let the market move on.

Sincerely,

Brian C. Gentile

Chief Executive Officer

Thursday, November 5, 2009

Government embrace of open source: the times they are a changin’

Substantial advancements are underway proving that open source software, at every level of the stack, is becoming mainstream in the U.S. Federal Government. Speaking at this week’s GOSCON Conference in Washington, the acting DoD CIO, David Wennergren, spoke about the new departmental understanding that open source software which manifests in commercial form is legitimate and should be considered for use.

I’m religious about efficiency and effectiveness in government, believing that strong democracies are built and reinforced through successful capitalism, which (in turn) is hindered when government is allowed to become bloated and expensive. In this sense, the government’s use of open source is necessary.

Further, I’ve previously emphasized how the government’s use of open source can act as a lighthouse of credibility that influences not only all of the public sector but spills into the private sector as well. I highlighted this while praising the choice of Vivek Kundra as the new Federal CIO and now have more first-hand evidence as I’ve spent even more time with government-focused partners and customers.

At Jaspersoft, we’re betting with our time and energy as we’ve enthusiastically joined both Open Source for America and, most recently, the Open Source Software Institute. Our belief is that profound change will come to the public sector partially due to new efficiency brought by technology.

I’m proud that Jaspersoft is at the heart of a new Business Process Management-driven BI application, developed by HandySoft, that delivers sophisticated business intelligence for the Nuclear Regulatory Commission. This is a system that saves time and delivers vital intelligence information to a large audience simply and powerfully, winning Gartner’s 2009 Innovation Award in the process. NRC adds to our already impressive roster of Government customers like NASA, the DoD, and NIH, giving us all hope that fewer tax dollars are being spent to deliver superior analysis and insight, and ultimately better decisions.

I invite you to follow Jaspersoft’s progress in helping to create more efficient government, at our new government-focused web page. This is our part in the return to a government of the people, by the people, and for the people . . . also known as “community”, which is what open source is all about.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, October 26, 2009

The White House Chooses Open Source

Drupal for Content Management

Great news announced this week from our colleagues at Acquia, the commercial open source backers of the Drupal web content management tool. The White House’s web site, www.whitehouse.gov, has relaunched using Drupal. On his blog, Dries Buytaert, CTO of Acquia and original creator of Drupal, describes this news.

I have passionately asserted in a past post that the U.S. Government has a long way to go in catching up with other western governments in its conviction to use new technologies, especially open source software, to the benefit of every citizen (the minimum benefit of which is better use of precious tax dollars). This news underscores the technological platform being created by Vivek Kundra, the new Federal Government CIO, and his even newer counterpart, CTO Aneesh Chopra.

I have long admired Drupal as a project and as a tool set. The teams I’ve led have built several substantial, commercial web sites using this tool. Most recently, Jaspersoft’s commercial web site has been re-built using Drupal and, coming soon, our community web site will use Drupal for all Homepage-like content, making our management of these two sites simpler and less costly.

Building web sites using an open source content management system makes just as much sense as using any number of other widely available, highly-regarded open source software and tools, such as Linux, Apache/Tomcat, PHP, and MySQL. Just as Dries explains, the choice of Drupal amplifies the features, security, and cost effectiveness of not only this world-class content management tool, but of open source software in general.

Fortunately, I can report similar increased interest and use of Jaspersoft’s open source business intelligence platform throughout the U.S. Federal Government. In the past, I’ve written about our work with the NIH, DoD, and NASA/JPL. So much has happened since the launch of Open Source for America and our open letter to President Obama. Soon, I’ll be adding to this roster of Federal Government customers (of Jaspersoft's) with more prominent projects in high-profile agencies. Stand ready as Government 2.0 is readying for launch.

My congratulations to the Obama Administration and the White House team for taking an important step forward.

Brian Gentile

Chief Executive Officer

Jaspersoft

Wednesday, September 23, 2009

The Open Source Renaissance

Note: This blog post also appears as a guest post on the blog at my alma mater, Eller College of Management at the University of Arizona. But I also wanted to share it with readers here.

It occurred to me recently that the open source movement is really nothing less than a renaissance. Perhaps that sounds grandiose, but stay with me.

If you think about it, for a few hundred years, some of the most significant advancements by mankind have come from, and are maintained in, proprietary (closed source) methodologies.

Take, for example, U.S. patent and copyright protection laws and policies. They reinforce proprietary, “closed source” rights and policies. As a result of this system, many substantial U.S. companies have formed around breakthrough ideas, but incentives are in place for those companies to guard and protect their intellectual property, even if others outside the company could extend or advance it more rapidly.

Now, to be clear, patent and copyright protection is necessary because it properly encourages the origination of ideas through the notion of ownership. But, too few people consider the upside of allowing others to share in the use of their patents and copyrights, because they think such distribution will dilute their value - when, in fact, sharing can substantially enhance the value. Fundamentally, “open source” is about the sharing of ideas big and small and the modern renaissance represents newfound understanding that sharing creates new value.

In many areas of science, the sharing of ideas (even patents and copyrights) has long been commonplace. The world’s best and brightest physicists, astronomers, geologists, and medical researchers share their discoveries every day. Without that sharing, the advancement of their ideas would be limited to just what they themselves could conjure. By sharing their ideas through published papers, symposiums, and so on, they open up many possibilities for improvements and applications that the originator would have never considered. Of course, the internet has provided an incredible communication platform for all those who wish to collaborate freely and avidly and is, arguably, the foundation for this renaissance.

That’s why it’s ironic that one of the laggard scientific disciplines to embrace open source is computer science. For the past 40 years, for example, incentives have been strong for a company to originate an idea for great software, immediately file a patent and/or register to copyright it, and then guard it religiously. No one would have thought that exposing the inner workings of a complex and valuable software system so that others might both understand and extend it would be beneficial. Today, however, there are countless examples where openness pays off in many ways. So, why have computer science and software lagged in the open source renaissance?

That computer science is an open source laggard is ironic because the barriers to entry in the software industry are relatively low, compared to other sciences. One might think that low entry barriers would reduce the risk to and promote the sharing of ideas. But, instead, software developers (and companies) have spent most of the last 40 years erecting other barriers, based on intellectual capital and copyright ownership - which is perplexing because it so limits the advancement of the software product. But, such behavior does fit within the historical understanding of business building (i.e., protecting land, labor and capital).

Another relative laggard area - and an interesting comparison - is pharmaceuticals and drug discovery. When I talk with colleagues about this barrier-irony phenomenon, this is the most common other science cited (i.e., another science discipline that has preferred not to share). But, in drug discovery the incentives not to share are substantial because the need to recover the enormous research costs through the ownership of blockbuster drugs is extremely high. In fact, because the barriers to enter the pharmaceuticals industry are quite high, one might think that would promote openness and the sharing of ideas, given that few others would genuinely be able to exploit them. But, once again, the drive to create a business using historically consistent methods has limited the pharmaceuticals industry to closed practices.

So, returning to computer science and software, maybe the reasons for not sharing are based on the complexity of collaboration? That is, it’s hard to figure out someone else’s software code, unless it’s been written with sharing fundamentally in mind. Or maybe there’s a sense that software is art, and I want to protect my creative work - more like poetry than DNA mapping.

Either way, the renaissance is coming for the software industry. Software will advance and solve new problems more quickly through openness and sharing. In this sense, computer science has much to learn from the other areas of science where open collaboration has been so successful for so long.

Fortunately, the world of software is agile and adept. According to research by Amit Deshpande and Dirk Riehle at SAP Research Labs, during the past five years the number of open source software projects and the number of lines of open source software code have increased exponentially. The principles that this new breed of open source software has forged are already leaving an indelible mark on the industry. Soon, its proponents believe, all software companies will embrace these fundamental open source principles: collaboration, transparency and participation. The course of this renaissance will be our guide.

I would be interested in your feedback on these ideas because the open source renaissance is well underway and I plan to be a model historian.

Brian Gentile

Chief Executive Officer

Jaspersoft

Wednesday, September 16, 2009

JasperReports v3.6: More Advanced Visualization for More Advanced Reporting Uses

I don’t often discuss Jaspersoft’s specific products in this blog, preferring to offer ideas and insights on the trends that are shaping the world of business intelligence. Today, though, I want to highlight the fact that we’ve launched a substantial new version of our flagship reporting tool that raises the bar in the world of reporting and advanced visualization: JasperReports Professional v3.6.

This new reporting engine, library and professional development environment (which includes iReport v3.6) adds new Flash-based geo-visualization and advanced charting capabilities. In fact, we’re including hundreds of advanced, 3-D graphs, maps and widgets to dramatically improve our customers’ data visualization experience. And we’ve tightly integrated this technology with the JasperReports engine and the iReport development environment to make a great total developer experience.

We’re very excited to deliver this advanced visualization technology for this important audience and market. As I commonly explain, our strategy is to deliver simple, powerful BI tools that are used by and considered useful for the widest possible audience. This release brings us closer to that goal.

I hope all of those developers who love JasperReports and iReport will check out our new product.

Brian Gentile

Chief Executive Officer

Jaspersoft

Thursday, August 27, 2009

Part IV: Next-Generation Web Applications Embrace Collaboration

I saved the topic of Collaboration, key to next-generation web application design, for my fourth and final post in this series for good reason. Social networking, wikis, instant messaging, and micro-blogging are now central to so much of what we do as both consumers and businesspeople that their effects on enterprise applications are already pronounced. Lyndsay Wise recently emphasized the effects of social media and open source BI in an outstanding article. My three previous posts described required building blocks for next-generation web applications. Collaboration is important enough to take up at least one floor of this new building.

Recently, my worldview changed with regard to software-based collaboration techniques. As a long-time business intelligence insider, of course I thought about the world from the BI tool outward. From this BI-centric view, collaboration features are added on to the BI platform to enable mark-up and annotation, workflow routing, and other basic collaboration features.

Then I saw Google Wave.

I’ve written about this before, but am saving the punchline for this post: The proper way to think about collaboration within a software system is to put the end-user in the center of the universe.

From this view, a business day is all about collaboration. So, the various tools that I use to do my job (CRM information, spreadsheet data, any variety of documents, and business intelligence-driven information) should be consistently collaborative. In other words, collaboration is the first-order matter to solve and other applications and tools should work within that collaboration paradigm. This is why email has emerged as and remains the most dominant collaboration platform. Hmmm. . . . that certainly changes my BI-centric view and makes an open source collaboration platform incredibly important.

And, while we are working hard at Jaspersoft to ensure the most important collaboration features will be delivered within our BI platform, the bigger picture says we’d better ensure our software works well within the most common collaboration platforms. Such consistency will make the end-user’s ability to share and collaborate significantly easier.

Setting aside Google Wave, I set out to find other, powerful examples of open source collaboration platforms that can be used today. I was surprised that many claim to be “collaboration platforms,” asserting that they encompass a more complete set of technical services required to enable those who need to work together toward common goals to do so much more effectively.

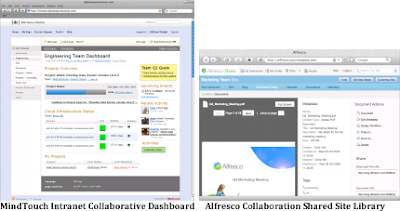

I am impressed with several open source collaboration platforms worth pointing to here because they can enable many other software applications or systems to work within them, thus accomplishing the end-user-centric view I emphasized earlier. For example, the Open Solutions Alliance (OSA) has created a “Common Customer View” demonstration to emphasize a nicely integrated set of open source products in a collaborative environment (including Jaspersoft and JBoss Portal). And, below are sample screen shots from Alfresco and MindTouch. Both of these companies position their products as collaboration platforms. While they differ in their approach and emphasis, both seem incredibly useful in the right solution scenarios.

MindTouch offers a well-orchestrated and flexible set of web services that enable a wide variety of collaborative applications to be developed on top of it. And, Alfresco focuses more on enterprise document-based collaboration.

These examples show that I’m not the only guy in open source software to embrace this new user-centric worldview.

I am both optimistic and energized that this new generation of collaboration platforms will help transform today’s difficult, non-collaborative world of typical enterprise applications. Importantly, collaboration combined with ubiquitous access, elegant presentation and end-user customization enables a web application that powers the next-generation workforce to new levels of capability.

Brian Gentile

Chief Executive Officer

Jaspersoft

Friday, July 31, 2009

Part III: Next-Generation Web Applications Are All About Customization

One of the sure hallmarks of successful web-based applications is providing the user with the ability to customize the application experience to suit his or her specific interests and needs. Such customization not only tailors the system to the unique use-case of the user, but, done properly, it actually extends the capabilities of the application. There are three primary categories of customization, each of which provides specific benefit for the user.

1. Personalization – settings or simple adjustments that tailor the application’s presentation to someone’s personal preference. The more visual and elaborate, the better.

2. Local Integration – the ability to include data or information from a desktop computer or local system (server) within the web application. Can range from simple (upload my photo) to sophisticated (dynamically embed my MS Excel chart within an iFrame and refresh every 60 seconds).

3. Mash-Ups – combining web-based data and information within a single HTML container, purely through presentation-level techniques (URL addressability, drag-and-drop, etc.) and with the goal of creating a new web service as a result. Ideally, a web application supports mash-ups in both directions: embedding live data objects from other sources and placing live data objects within other HTML containers.
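To make the idea of URL addressability a little more concrete, here is a minimal sketch in Python of how a live data object might be wrapped for embedding in another HTML container. The base URL, the `region` parameter and the function name are all hypothetical, chosen only for illustration; this is not how JasperServer or any particular product exposes its content.

```python
from html import escape
from urllib.parse import urlencode


def build_embed_snippet(base_url: str, params: dict,
                        width: int = 400, height: int = 300) -> str:
    """Return an <iframe> tag that embeds a URL-addressable data object."""
    # Sort the parameters so the generated URL is stable across runs.
    query = urlencode(sorted(params.items()))
    src = f"{base_url}?{query}" if query else base_url
    # Escape the URL so characters such as '&' are valid inside an HTML attribute.
    return (f'<iframe src="{escape(src, quote=True)}" '
            f'width="{width}" height="{height}"></iframe>')


# Hypothetical report URL and filter parameter, for illustration only.
snippet = build_embed_snippet("https://reports.example.com/sales-chart",
                              {"region": "New York"})
print(snippet)
```

Because the data object is addressed entirely by its URL, the same snippet can be pasted into any page that accepts HTML, which is what makes presentation-level mash-ups possible in both directions.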

Of course, the consumer web provides many examples of customization - myYahoo and iGoogle are among the most straightforward and popular. And the social networking sites (MySpace and Facebook especially) are founded on this trait of customization. In web-based enterprise software, we have fewer powerful examples, but they exist. Certainly Salesforce.com provides a respectable amount of customization, by granting user-defined ability to assemble pages for displaying reports, dashboards and even some control over interactive/input fields.

To illustrate two of the three categories of Customization listed above, I’ll again resort to my favorite web-based application, JasperServer v3.5. Below is a screen shot of a JasperServer dashboard that exhibits both local integration of data (JasperServer-managed and R-managed, where R is the popular statistical analysis tool) and mash-ups (integration of Google map, news feeds, Twitter feeds, etc.).

In this case, the JasperServer dashboard is displaying a broad set of data and information, some of which is managed by JasperServer but much of which is external and is simply being displayed here conveniently for the end-user. Technically, each data element is contained within its own iFrame and is managed separately. And, each data element generated by JasperServer can be embedded in another HTML container for use in another web application (with an intelligent, dynamic link maintained back to JasperServer). Additionally, each data element (regardless of whether it is internally- or externally-sourced) can have its attributes mapped to a global input control. So selecting "New York" in the input control could simultaneously filter a bar chart and re-orient the embedded Google map (or news feed) to that region.
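The global input control described above can be sketched as a simple publish/subscribe arrangement. The Python below is only an illustration under my own assumptions: the class names, the sample sales figures and the approximate map coordinates are hypothetical and do not reflect JasperServer's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class GlobalInputControl:
    """One input whose selected value is pushed to every bound dashboard element."""
    listeners: list = field(default_factory=list)

    def bind(self, listener: Callable[[str], None]) -> None:
        self.listeners.append(listener)

    def select(self, value: str) -> None:
        # Notify each element; every element decides how to react on its own.
        for listener in self.listeners:
            listener(value)


@dataclass
class BarChart:
    rows: list                      # (region, sales) pairs
    visible: list = field(default_factory=list)

    def filter_by_region(self, region: str) -> None:
        self.visible = [row for row in self.rows if row[0] == region]


@dataclass
class EmbeddedMap:
    centers: dict                   # region -> (latitude, longitude)
    center: Optional[tuple] = None

    def recenter(self, region: str) -> None:
        # Keep the current view if the region is unknown.
        self.center = self.centers.get(region, self.center)


# Illustrative data only.
chart = BarChart(rows=[("New York", 120), ("Chicago", 80)])
city_map = EmbeddedMap(centers={"New York": (40.71, -74.01)})

control = GlobalInputControl()
control.bind(chart.filter_by_region)
control.bind(city_map.recenter)

control.select("New York")
print(chart.visible, city_map.center)
```

The design point is that the control knows nothing about charts or maps. Each element, whether internally or externally sourced, simply maps the selected value onto its own attribute.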

Of the four major web application design points about which I’ve been writing, Customization is probably the most profound in its ability to transform an organization’s use of web applications. For this reason alone, every web application should embrace this fully. My next post will focus on Collaboration, the fourth and final web application design point.

Tuesday, July 21, 2009

Open Source for America: Good for the U.S., Good for the Globe

Building a more efficient government is everyone’s job.

This week, a broad and diverse coalition of technology industry leaders, academic institutions, associations and communities is coming together to form a unified voice that will promote and amplify the use of open source software within the U.S. Federal Government. The coalition is called Open Source for America. Jaspersoft is very pleased to be part of it, and I believe its existence is important for at least three reasons.

1. Helping the U.S. Federal Government to be more efficient makes sense for every citizen. Promoting the use of open source software as a means to do so is a no-brainer.

2. Many of the standards and methods put forth by the U.S. Federal Government influence the technology adoption plans in private enterprise. With its incredibly broad footprint and profile, the U.S. Government will be helping a big section of nearby industries adopt open source and become more efficient (as a result) as well.

3. More aggressive adoption and use of open source software will place the U.S. Government on par with the governments of other leading nations in its drive to use open source software for the benefit of its citizens. Taking a leadership position on this world stage is important for the United States, where much of the world’s open source software is collaboratively developed.

Each quarter, more of Jaspersoft’s community and commercial success is coming from the U.S. Federal Government. We’ve talked publicly about our work with NASA and the Jet Propulsion Laboratory, the various branches of the Department of Defense, and the National Institutes of Health. These diverse organizations have found remarkably common reasons to choose open source business intelligence from Jaspersoft: our modern and flexible architecture, lowest possible cost of ownership and a substantial community on which they can rely for constant interaction. These are the same substantial advantages that every Government organization should be enjoying. And, as a taxpayer, I would say the sooner the better.

I’ve energetically endorsed an open source technology agenda during the course of the Presidential campaign and was among the first signatories of the Open Source “open letter” to President Obama shortly after he was sworn in, promoting that document in this blog and elsewhere. Open Source for America represents the next logical step forward in helping our Government demonstrate some of the most storied and valuable principles of open source: transparency and collaboration. Let us hear about your support for this coalition by following the OSA on Twitter and sending an email to me at bgentile@jaspersoft.com with your ideas for how the U.S. Government should use open source software to better build our democracy.

Friday, June 26, 2009

Part II: Next-Generation Web Applications Require Elegant Presentation

If you’re old enough to have used an IBM 3270 “green screen” or even MS-DOS at some point in your career, you know the exact opposite of elegant presentation. While enterprise software evolved from inconsistency and poor design through to client/server architectures and ultimately the web as a central design point, many things have changed, but one hasn’t: the end user cares immensely about elegant presentation. And, by “presentation” I mean the design, look and feel and the interaction model provided within the application.

Recently, I’ve been researching model Web 2.0 applications – looking for inspiration. I am positively impressed but still wanting a future full of even more capable and elegant, web-based products. As a resource, I’ve found Dion Hinchcliffe’s Web 2.0 Blog very helpful. He emphasizes that the web community gets smarter with every product release because it has become so organic, when he says:

“Not only do we have examples of great online applications and systems to point to and use for best practices, but the latest tools, frameworks, development platforms, APIs, widgets, and so on, which are largely developed today in the form of open source over the Internet, tend to accumulate many of these new best practices.”

Specifically, Hinchcliffe cites “clean, compelling application design” as among the most important attributes of a Web 2.0 application. For fun, some of the latest trends in web application design are published by Smashing Magazine. The primary point is that “attractive applications inherently attract new customers to try them and is a pre-requisite to good usability and user experience.”

I’ll provide an example of elegant presentation (below), taken once again from my favorite Web 2.0 application – JasperServer.

Our goal has always been simple, intuitive behavior, so the product doesn’t get in the way of the user exploring the data (which is the real star of the show in a BI application). While we (at Jaspersoft) can cite a wide number of things we plan to improve in our design and interaction model, we shouldn’t forget that our current product, based entirely on Web 2.0 techniques and technologies, makes use of many state-of-the-art features and is designed to deliver a great user experience.

I’ll use this screen shot again and others in my next post as I continue to discuss the requirements for successful next-generation web applications. Stay tuned for my personal favorite: “End-User Customization.”

Thursday, June 11, 2009

Part I: Next-Generation Web Applications Require Ubiquitous Access

Delivering rich, interactive content to you, consistently, whether you’re using a desktop computer or wireless mobile phone, is Google’s agenda. With great influence on the mobile device’s operating environment (à la Android), Google can more specifically control the information served to those devices. Ideally for Google, there would be precious few moments of your waking life when you’re not interacting with some Google service.

Fundamentally, anyone building a new web application should understand that constant and consistent access to the same systems and data, from every computing device, is a top design goal. More distributed workforces, more time spent working remotely and traveling, and the ever-expanding workday require that knowledge workers today not only be in touch, but be online. If your application and data access experience is compromised because of the computing device, the application service wasn’t designed properly, period.

As an example, I’ll use the web application I know best – JasperServer, which (shown below) is delivering access to an executive dashboard through two leading smart phones.

It doesn’t matter that the display device is a smart phone or PDA, because the executive dashboard looks and behaves similarly to its desktop counterpart. Why? Because the application relies on open standard, server-side technologies that operate uniformly within a full-featured web browser. And, it shouldn’t matter where that browser is running because even mobile web browsers are supporting advanced web technologies, like AJAX and Flash. So, designing and delivering for ubiquitous access should be the new norm. Fortunately, open standards (and open source) are largely driving this technological convergence. Good thing as these handhelds now pack the processing power of a full personal computer and users will expect their applications to work properly regardless of the device at the end of the bitstream.

Delivering ubiquitous application and data access for enterprise workers is just the first of four technical requirements of next-generation web applications. Next post, I’ll explore the second topic in my four-part series: “Elegant Presentation”.

Wednesday, June 3, 2009

A New Blog Series: Next-Generation Web Applications

I see Google Wave as another strident reason why harnessing the power of information, inside an enterprise, will begin to look more and more like the consumer-oriented web. The next-generation knowledge worker won’t struggle with the trade-offs that those of us who grew into computers and the Internet during the course of our careers have had to deal with. And, this transition will occur just in time – as the aging workforce in the world’s most developed economies retires at its fastest pace ever, only to be replaced by younger workers with consumer-like expectations for the way IT systems and applications should behave. You might recall that I’ve referred to this phenomenon in a past post as “The Consumerization of Information”.

In my next four posts, I’ll describe the major design points that help define the simplicity and usefulness of this next generation of web-based applications. So, to prepare any application project for a much less bridled future, everyone should deeply understand:

I. Ubiquitous Access to both systems and data

II. Elegant Presentation that leverages the power of the latest web technologies

III. End-user Customization that enables personalized improvements, mash-ups and combinations that appeal to each individual

IV. Integrated Collaboration that helps connect people with information and ideas simply and intuitively.

I’ll use my next four posts to expand on each, in order. And, I’ll look forward to your comments and suggestions to make these four points more powerful and complete. I’ll be looking for ideas on Twitter (follow me at @BrianG_Jasper or @Jaspersoft), as well.

Friday, May 29, 2009

Inviting Community and Commerce

Community members provide the experience, time, attention and passion that help to make our products better with every release. Commercial customers provide us with fuel (revenue from subscription sales) that enables us to deliver the very best open source business intelligence products possible. While this is a simplification of the intricate roles being played by our community and commercial customers, one of these groups without the other results in a far less capable Jaspersoft. If Jaspersoft were a human body, the community would be our heart and the commercial customers would be our mind. Not a bad metaphor . . . as I transition into how we put that into action.

On the heels of the launch of our new community web site, the JasperForge, we even more recently debuted a new commercial web site: www.jaspersoft.com. While the technology employed in this new commercial site is every bit as impressive as our Forge, what I’m most pleased with is the emerging linkage between the two properties. More and more, our community is becoming our commercial customers. In turn, more and more, our commercial customers are genuinely part of the Jaspersoft community. It only makes sense to create a total web site experience that ensures all visitors (to either site) can get the most out of every click. While we believe it is important to maintain two sites with two different sets of objectives, we also know that inviting both community and commerce is good for the whole body of Jaspersoft. One example: the Jasper Shop landing page is always among the top five most frequently visited pages at Jaspersoft.com. And, the most common referring site to this Jasper Shop page? JasperForge.org.

If you’ve not visited these new sites yet, I invite you to do so. Hopefully you’ll find reasons to visit and participate in both. In the meantime, you can also follow all things Jaspersoft by joining our Facebook group and our Twitter feed. Enjoy.

Tuesday, May 12, 2009

JasperForge Drives New Levels of Collaboration

When we originally envisioned a new Forge platform that would enable the types of collaboration and networking required for the most advanced community development, this new Forge is what we had in mind. Our earlier JasperForge release, the first one built on the EssentiaESP platform, was launched last summer and we termed it a “production beta.” We did so for a variety of reasons, mostly to indicate that we had a large number of things with which we were not yet finished and that we were embarking on an important process that would result in what we see today.

Our new release is termed “full production” because it is equipped with all of the social networking, sharing and engagement features we envisioned last summer. Now we have a full-fledged community development platform that can enable new types of interaction, project-building and even elements of social networking that will simply help community members be more productive, more quickly.

Open source software communities don’t look like they did five short years ago. Since open source started being widely adopted, new types of community contributors have gotten involved in defining features and requirements. Software developers, business partners and resellers all play critical roles in Jaspersoft’s product development. And, because we host the world’s largest open source BI community, we believe it is our natural responsibility to take a leadership role in defining the next generation of Forges, or community collaboration environments, to meet these evolving demographics.

If you’ve not yet experienced the new JasperForge, I encourage you to do so. You will find new features such as Google Gadgets, RSS support, and Wiki and blog improvements, among many others. As always, your comments, feedback, and ideas will directly feed our pipeline of planned improvements. So, register and let us know what you think. Enjoy!

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, May 4, 2009

SpringSource + Hyperic = Advancement for Open Source

I’ve long believed that mass and velocity matter when building a business. Mass comes from serving more customers, building a bigger community and ecosystem, and succeeding financially. All of the successes that both SpringSource and Hyperic have demonstrated during the past few years, when combined, will simply create a more mass-ful and influential open source software company that will further validate our shared methodology. Ultimately, adding credibility and maturity to open source software so that more enterprises and organizations will recognize its many advantages will be good for all of us.

So, I congratulate Rod Johnson (CEO, SpringSource) and Javier Soltero (CEO, Hyperic) and invite you to do so as well. We look forward to Jaspersoft’s continued strategic partnership with the combined company. You can learn more about this announcement at the SpringSource web site.

Thursday, April 30, 2009

SAP is Proving the Open Source Model

Like all aged, proprietary software mega-vendors, SAP’s software license growth has slowed during this past year and it is seeking to counter that downturn by raising maintenance fees to extract more revenue from existing customers. You can read a summary of SAP’s latest financial results here. Keep in mind that, historically, customer support and software maintenance fees have been particularly incendiary topics within the SAP user community.

To remedy SAP’s ever-increasing maintenance rates, the SAP User Group Executive Network (SUGEN) has been at the bargaining table with SAP officials, trying to negotiate a compromise. Forrester’s Ray Wang has reported on the outcome of this discussion with a solid summary. Bottom line: SUGEN has compelled SAP to measure and monitor its customer support progress through a series of key performance indicators (maybe they should use Jaspersoft to do so ☺?), indexing future maintenance increases based on the delivery of proven customer value. In any case, the SAP press release states: “Starting in 2010, the price of SAP Enterprise Support for existing customers will continue to increase based on individual contract terms but will not be higher than a yearly fixed upper cap. This translates to an increase average of no more than 3.1 percent per year from 2010 onwards. The price of SAP Enterprise Support will be capped at 22 percent through 2015.”

Well, this seems to be quite a bargain for SAP customers. They now have the negotiated privilege to pay SAP 22% (through 2015) for the mediocre support they’ve been complaining about for years. If this isn’t compelling evidence of the need for open source software subscription models, I don’t know what is. CIOs are learning, through trying circumstances, how to “right” the backward pricing and sales model that enterprise software companies have imposed on them for 30 years. I’ve written about this topic energetically in the past and would point out that open source software when combined with subscription-based pricing enables any organization to wade into a product and solution at its own pace, proving the value before or while it is being used (not many quarters or years afterward as with proprietary software and licensing models).

To all the SAP / Business Objects customers out there whose budgets won’t allow yet another maintenance increase, let’s talk. We can stand behind the “R” in ROI.

Brian Gentile

Chief Executive Officer

Jaspersoft

Thursday, April 23, 2009

Is Open Source BI Making a Difference?

It remains too early for confident speculation with regard to MySQL, even with the Oracle executive analyst call (announcing and discussing the acquisition of Sun) already complete. It was just last year at this Conference that similar questions were being asked about the acquisition of MySQL by Sun. What a difference a year makes.

So, I set my sights on answering a more practical question: is open source BI making a difference? My investigation and observations have yielded a more bullish “yes” than even I expected.

During the course of this week, I met with partners and customers who use both Jaspersoft and MySQL. They told me similar stories about solving new BI problems with our products . . . or solving old problems but in new ways and for new organizations that wouldn’t have had the financial or technical wherewithal to use a costly, complex, aged, proprietary BI product. Commonly, I was told about demonstrated cost savings that accrue to those organizations that are forging forward with open source BI solutions. The average order of savings is 80-90%. Pretty hard to ignore that kind of price/performance improvement in this day and time.

The Oracle acquisition news dominated our RSS readers this week – for good reason. It’s an important industry movement. But, it’s worth pointing folks to some new open source BI contributions that Jaspersoft announced this week.

* Bi3 is using Jaspersoft and Infobright to power its new on-demand Virtual Business Intelligence Competency Center. This strikes a new and sophisticated tone for cloud-based BI.

* Jaspersoft and Infobright have launched a joint project on JasperForge to encourage collaboration and adoption of both companies’ community edition product lines. The goal: end-to-end BI and DW that can enable every organization to compete more effectively.

* Jaspersoft has updated its Jaspersoft Business Intelligence Suite for MySQL. The new Jasper for MySQL v3.5 is specifically certified and packaged for the MySQL database and is available through MySQL’s OEM channel.

My hat’s off to Sun and the MySQL team and its community for hosting a great event this week. And, my thanks to the Jaspersoft team and community for ensuring we continue to make a big difference in open source BI.

Monday, April 20, 2009

Sun & Oracle Deal: A Battle for the Developer

Make no mistake, Oracle’s acquisition of Sun is about one thing: the hearts and minds of the software development community. Oracle will now have control of the Java programming language and some of the most important development tools built on top of it. This places them at a level of influence which they’ve not yet wielded and allows them to compete far more strategically with SAP, IBM and Microsoft (Oracle’s true competitors). To prevent developers from fleeing to those competitors, Oracle will need a different and more transparent, collaborative approach than it has ever mustered in the past. This audience will demand it.

But, Oracle’s first order of business will be the rationalization of their new middleware and infrastructure stacks against what they already own. And, of course, the biggest task will be the key decisions taken with regard to the open source and proprietary products. Open source companies and developers need to watch Oracle’s moves in the coming weeks and months very closely. The old adage that with great power comes great responsibility is very relevant here. This will be Oracle’s top priority because it affects the biggest audience and could have a substantial impact on the open source movement in the near term.

Lastly, I believe any speculation on the fate of the much-loved MySQL database is, at this point, premature. While Oracle has plenty of database assets, none garners more appeal with the modern, web-based world than MySQL. Oracle execs will surely understand the most successful use-cases for MySQL and allow it to continue flourishing in those arenas. Where MySQL encroaches on the functionality of Oracle’s main database products, the outlook is murkier. The watchword is: stay tuned.

Friday, April 10, 2009

Open Source BI Can Do Better

One of my most often-repeated phrases, inside of Jaspersoft, is “if all we do is replicate the capabilities of the aged, proprietary BI vendors of the past, we will create some value but not enough. Jaspersoft is about innovation and doing things much better, much differently. Our mission is to reach more organizations and individuals with our business intelligence tools than any company that has preceded us.” And, I mean it.

Which is why I am so proud of our Jaspersoft v3.5 release, announced just last week at the Solutions Linux event in Paris. I spoke with Sean Michael Kerner at InternetNews about this new product release and he captured some of the most important points. In summary, Jaspersoft v3.5 introduces three new and substantial elements of functionality that distinguish our product from all other business intelligence platforms:

1. Integrated Analysis - multi-dimensional capabilities delivered within a report or dashboard, without the need for an OLAP cube or data warehouse. If desired, the customer can choose to process query requests against an in-memory data set (within JasperServer). If the customer prefers greater performance, the query processing can be pushed down to the database. This choice is configured with a check box. Pretty cool.

2. SaaS-Enabling BI Platform Architecture - multi-tenancy is built in to v3.5. An OEM customer can now use our BI platform to embed BI functionality within their SaaS application. We already have about 50 commercial SaaS customers using previous versions of our product. We've learned a lot from them and delivered this formal, groundbreaking functionality within v3.5. Our plan to expand SaaS capabilities in future releases will continue to distinguish our platform and set the standard for BI platform functionality.

3. User and Data Scalability - we've completed a wide series of performance benchmarks with v3.5. This new platform can scale from 250 to 1,000 concurrent users on a single, quad-core-configured JasperServer, depending on the type of tasks those users want to accomplish. We know of no other BI platform that can deliver anywhere near this kind of price / performance. Period.
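
For readers who want to picture the choice described in point 1 (process a query against an in-memory data set, or push the processing down to the database), here is a minimal sketch. It is not how JasperServer implements Integrated Analysis; it uses Python's built-in sqlite3 module, and the `sales` table, the function names and the `push_down` flag are all hypothetical, chosen only for illustration.

```python
import sqlite3
from collections import defaultdict


def build_demo_db():
    """Create a tiny in-memory database with a hypothetical sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("East", 100), ("West", 250), ("East", 50)],
    )
    return conn


def totals_in_memory(conn):
    """Fetch the raw rows, then aggregate them inside the application."""
    totals = defaultdict(int)
    for region, amount in conn.execute("SELECT region, amount FROM sales"):
        totals[region] += amount
    return dict(totals)


def totals_pushed_down(conn):
    """Let the database do the aggregation and return only the summary."""
    rows = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region"
    )
    return dict(rows)


def totals(conn, push_down):
    """The 'check box': one flag selects where the work happens."""
    return totals_pushed_down(conn) if push_down else totals_in_memory(conn)


if __name__ == "__main__":
    db = build_demo_db()
    print(totals(db, push_down=False))
    print(totals(db, push_down=True))
```

Both paths return the same totals; the difference is where the work is done. With large data sets, pushing the aggregation down typically means far fewer rows travel from the database to the application.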

So, you can continue to expect Jaspersoft to deliver BI tools that solve new reporting and analysis problems for the many organizations that need them. And, you can continue to expect us to do so at the lowest possible cost. This is a win-win for our customers and an invitation to check out a demonstration of Jaspersoft v3.5.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, March 23, 2009

CIOs Should Meet Open Source Half Way

The CIO panelists emphasized that open source commonly suffers from misperceptions that prevent serious consideration of the products. The solution they offered is for open source vendors to spend more time and money courting them, much like the aged, proprietary companies with whom open source vendors, now more than ever, compete. While I agree with (and appreciate) their feedback, I disagree with their proposed remedy. And, here’s why.

About one month earlier, I received a call from the CIO of a multi-billion dollar technology company who nicely summarized the predicament facing all IT organizations. While lamenting the 80% of his budget dedicated to maintaining legacy systems, he was struggling to find ways to reduce costs and increase innovation. To make matters worse, he just received his annual maintenance bill from his long-time (proprietary) BI mega-vendor and the amount increased from 20% to 22% of his original license price, adding meaningfully to his “80% problem.” Subsequently, he offered his summary:

“I’ve come to realize that the traditional software industry pricing and packaging model is fundamentally broken. I spend huge sums on license fees, services and support in advance of even knowing if the complicated software is going to provide the return I’ve been promised. I bear all the risk.”
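
To put the maintenance increase described above into numbers, here is a minimal sketch. Only the 20% and 22% rates come from the CIO's story; the $1,000,000 license price and the function names are hypothetical, used purely to make the arithmetic concrete.

```python
def annual_maintenance(license_price, rate_percent):
    """Yearly maintenance fee as a percentage of the original license price."""
    return license_price * rate_percent / 100


def relative_increase_percent(old_fee, new_fee):
    """How much larger the new fee is than the old one, in percent."""
    return (new_fee - old_fee) / old_fee * 100


if __name__ == "__main__":
    license_price = 1_000_000  # hypothetical figure, not from the original story
    old_fee = annual_maintenance(license_price, 20)
    new_fee = annual_maintenance(license_price, 22)
    print(old_fee, new_fee, relative_increase_percent(old_fee, new_fee))
```

Whatever the license price, moving from 20% to 22% of it raises the annual maintenance bill by a tenth, even though the rate itself moved by only two percentage points.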

The transparency, greater simplicity and enormous cost-savings of open source software solve his business problem. CIOs and open source vendors have a complementary relationship because IT teams can wade into the technology at their own pace, learn about a product’s capabilities and even put it into use, before any money is spent on license, services or support. In this sense, the return on the prospective use of the open source product is known before any investment occurs (not including the time and energy of the internal IT team) – which fundamentally rights the upside down model we’ve become accustomed to during the past 40 years.

So, I’ll assert that commercial open source vendors are the most closely aligned with the IT organization and it’s time for CIOs to take proactive steps to learn more about open source alternatives. This is where I disagree with the suggestion of the CIO panel at the Think Tank event. If open source software companies ramp up sales and marketing to behave like the dated, proprietary vendors, the significantly higher costs would reduce some of the advantage we pass along to customers. Instead, I urge CIOs and IT staffers to make it their job to learn more about open source software and reach out to those vendors who are best aligned to help them reduce their 80% maintenance cost problem and finally get back to innovating, much like the CIO that contacted me.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, March 9, 2009

First U.S. Government CIO Offers Hope through Efficiency and Transparency

“‘It’s tough in tight budgets to find the innovative path,’ Kundra notes, which is why he was so focused on gaining stock-market-like efficiencies in weeding out wasteful projects and identifying strong ones. Thanks to the savings already established from this approach, he was able to set up an R&D lab to test new ideas.”

Mr. Kundra has an historic opportunity to help set the agenda not only for Information Technology within the U.S. government, but throughout the public sector and even in private enterprise. As he constructs his game plan in this new role, I’ll offer Mr. Kundra three ideas to literally transform our government’s approach to IT:

1. Tackle the “80% maintenance cost” problem head-on by creating the most efficient IT infrastructure in the world. Do this by using the array of proven technologies that can measurably eliminate costs, especially cloud-based computing services and open source software. Avoiding the status quo (aged, proprietary software and hardware models) will help free up funding for the next two points.

2. Drive innovation by making the most vital applications more accessible and available through an increasingly consumer-like user experience. [Note: Kundra has specific experience here, impressively]. This could encourage a whole new generation of IT personnel who will want to work for the government.

3. Support your President’s agenda by using information technology to re-create the government of the people, by the people and for the people. Social services, contracts and procurement, and public safety are all great places to start. Most interestingly, the entire process of creating law can be made transparent and accessible through wikis, blogs, forums, and the collaborative construct of a Government “Forge”. Call it “OneWorldForge” and use it to re-establish the United States as the upstart democracy that once again changes the world.

I offer my congratulations to Vivek Kundra and wish him much success in this important new role.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, March 2, 2009

Building on the Success of Open Core, guest post by Matt Aslett

Thanks to Brian Gentile for the chance to guest post on the debate surrounding open source business models. Brian recently joined that debate, writing that the Open Core model offers the best model for community and commercial success.

I absolutely agree that Open Core is a successful strategy. In the report, Open Source is Not a Business Model, we noted that it is now the most popular commercial open source licensing strategy, used by 23.7% of the 114 vendors we assessed and 40.4% of those that were using a commercial licensing strategy to generate revenue from open source.

However, I also recently questioned whether the Open Core model is sustainable in the long-term (I'm thinking 5-10 years).

This is partially based on my belief that we will see a shift away from vendor-dominated open source projects (such as MySQL and Jaspersoft) to vendor-dominated open source communities (such as Eclipse and Symbian) during this period, but also because there are other potential problems that arise with the Open Core model.

Rather than re-hash those issues here I thought it would be better to use this guest-post opportunity to suggest some strategies that could be used to ensure a sustainable implementation of the Open Core model.

Many of these are being implemented by the various Open Core vendors, including Jaspersoft. With that in mind, and with the final caveat that unlike Brian I am the chicken in this debate (involved but not committed), these are the issues that I think are critical to a successful Open Core model:

Truth in advertising

Be absolutely transparent about licensing. Potential customers don't like feeling confused or misled and it is vital that the marketing makes it clear that the community version is open source and the enterprise edition is not. Personally, I believe that Open Core vendors could and should be referred to as "open source vendors" but that is something of a hypothetical construct. What is clear is that if the product in question does not use an OSI-approved license it is not open source, and should not be referred to as open source.

Don't promise what you can't deliver

On a related note, open core vendors must avoid overselling the benefits of open source. Be an advocate for open source, but never forget that the revenue generating products and features are not open source. Tarus Balog of the OpenNMS Group recently highlighted the theoretical contradiction at the heart of the Open-Core model in the context of the request from open source vendors, including some open core advocates, for open source to be included in President Obama's economic stimulus plans. If you ask the government to mandate open source, don't be surprised if it insists on the source code to your proprietary functionality. Similarly in our Cost Conscious report, we found that 73% of end users surveyed expected to generate cost savings from deploying open source software through reducing the cost of software licenses. Clearly some of them are going to be disappointed if they need to be deploying software licenses to get the functionality they require.

The new UK government policy on open source software also makes the distinction between the treatment of open source licensed products and the exit, rebid and rebuild costs associated with proprietary software. Yes, there are licensing mechanisms that can be used to ensure Open-Core products can be used as part of pro-open source adoption policies, but Open-Core vendors must make sure they are not selling customers a vision of open source while delivering the proprietary licenses they are supposedly trying to avoid.

Separation of church and state

The further removed the proprietary functionality is from the needs of community users the less likely it is that the community will develop their own alternatives and the easier it will be to manage the needs of both commercial and community users. If the vendor is hearing constant demands from the community for features in the enterprise version then the balance is wrong. It might sound like a great opportunity for up-sell but if the community users are never going to pay for the enterprise product then it just creates confusion and antagonism. When deciding on proprietary features vendors should create a list of features that is only ever going to solve the problems faced by enterprise customers. In the context of the law of Conservation of Attractive Profits, articulated by Clayton Christensen in The Innovator's Solution, it also makes sense for a vendor to focus its attention further up the value chain than the stage it is actively commoditizing with the open source community version.

Care in the community

Brian is right to say that "Community and Commercial customers form a necessary and symbiotic virtuous circle" and that "done properly, the resulting broad use yields benefit and value to both the Community and Commercial customers." Doing it properly means looking after and understanding the community. Among other things a vendor needs to:

- Hire a proven, experienced and respected community manager to liaise between the company and the community

- Create a forge - if you don't know who your community is and what they are doing with the software you cannot hope to understand why an individual or company is part of the community and respond to their wants and needs.

- Encourage partners to become part of the community, rather than part of commercial partnerships, to help improve the core code base and enable new commercial strategies.

Defining and creating understanding about licensing and revenue strategies is important but philosophical debates between open source vendors can appear from the outside like a fight between two bald men over a comb (and yes I am totally aware that I am guilty of propagating the current debate, I fully intend this will be my last word on the subject of Open Core for a while). Michael Tiemann recently claimed that the world wastes $1 trillion per year in "dead-loss writeoffs of failed proprietary software implementations" and maintained that open source software is the way to solve that problem. He also stated that "semi-proprietary strategies... are all too clever by half, because they all imagine winning the next battle in a war that's already been lost." Of course he might not be right, but $1 trillion makes it worth thinking about.

I realize that for many Open Core vendors I am preaching to the converted. Many of the same themes were discussed on a panel on open source at the recent Accel Symposium at Stanford, for example.

Open Core is definitely the most popular and successful model of commercial open source in use today, and I expect it to remain so for the next few years, but the conclusion of Sun's Zack Urlocker on that Accel open source panel is worth bearing in mind:

"There are many different ways to skin this cat. There isn't one single model for commercializing open source, and things will continue to evolve as the market expands. What worked in the past may not always apply in the future."

Matt Aslett

Analyst, enterprise software, The 451 Group

Blog

Wednesday, February 25, 2009

Open Core Model Offers Best Opportunity for Community and Commercial Success

“Open Core” was originally offered by Jaspersoft’s Andrew Lampitt as a new term to define the commercial open source software model that relies on a core, freely available (e.g., GPL) product architecture that is built openly with a community, all the copyrights for which are owned by the sponsoring vendor, and which includes premium features on top, wrapped entirely with a commercial license. The 451’s Matt Aslett quickly used Andrew’s open core definition and relies on it within his most recent reports and articles. I would enhance Andrew’s definition by adding Jaspersoft’s current open core ideals: the core product is extended with commercially licensable add-ons (and those contributions come from Jaspersoft or its community), each extension comes with visible source code, community collaboration is encouraged (including feature “voting”) and we, as hosts of the community, actively monitor its health and vibrancy to ensure constant progress.

Roberto Galoppini properly infuses a heavy dose of being “community-driven” as key to any open source model. I agree and will expand below. Matt Aslett recently re-stated his distinction between open core (he calls it “hybrid” in this recent post) and the embedded open source model. Matt believes the embedded model possesses long-term advantages and lower risks, which I think depends mostly on which open source product / project is embedded. And, a good bit of debate has ensued that seeks to label companies using the open core model as not substantially different than proprietary software companies. I believe this is nonsense as the distinctions between the open core software companies with which I’m familiar and traditional software companies are stark. To explain, I’ll cite my three primary reasons the open core approach provides the best opportunities for community and commercial success.

1. Community involvement in the core code is encouraged, while community “extensions” are not only plausible, but probable. The product “core” is built collaboratively with the Community and a non-restrictive license (e.g., GPL) ensures a variety of appropriate uses. A successful open core model should deliver a core (free) code base that is both substantial in its capabilities and successful in its Community.

2. Commercially-available extensions, a superset of the core and ideally with visible source code access, are provided, thus creating the value and assurance commercial customers often require to sign a commercial license. These extensions are made far more valuable because of the community-focused core code base acting as the underlying foundation.

3. The Community needs a healthy and growing group of Commercial customers to both legitimize the open source code base and ensure necessary financial success so the on-going advancement of the products / projects is assured. And, Commercial customers need the Community for the breadth of ideas and energy it represents, which helps the complete code base advance more rapidly and with higher quality than it ever could otherwise. Thus, the Community and Commercial customers form a necessary and symbiotic virtuous circle.

In this debate, some have claimed the only true and legitimate open source model is to provide identical community and commercial editions of the source code. The argument is that relying exclusively on services and support revenue will sufficiently sustain a vendor and that creating commercial extensions renders an open source company no different than a proprietary software company. At a minimum, this argument misses a valuable history lesson. Most major software categories that open source has positively disrupted have required successful commercial open source companies to eventually use a model similar to open core, in order to continue growing. Think JBoss, Linux (Red Hat Enterprise Linux, Novell/openSUSE), SugarCRM, Hyperic, Talend, and of course, Jaspersoft. Done properly, the resulting broad use yields benefit and value to both the Community and Commercial customers. Accordingly, we at Jaspersoft take our community responsibilities as seriously as any commercial contract.

The pure open source model will continue to democratize software development and yield some commercial success. But to truly disrupt software categories where proprietary vendors dominate (and to deliver large new leaps in customer value), the open core model currently stands alone in its opportunity to deliver community progress and commercial success.

Brian Gentile

Chief Executive Officer

Jaspersoft

Friday, February 13, 2009

U.S. Government Should Consider Open Source

Led by the Collaborative Software Initiative, an open letter to the new Commander-in-Chief went live on Tuesday, February 10. I signed this letter, along with a large number of fellow open source CEOs and executives, including colleagues at Alfresco, Ingres, Hyperic, Talend, Compiere, MuleSource, OpenLogic, Unisys, and just recently, Red Hat. My hat’s off to Stuart Cohen and David Christiansen at the Collaborative Software Initiative for spearheading this dialog with Mr. Obama. The tenets of the letter are well aligned with the advice I offered our next president in my early November blog post.

Because I encourage far more efficient use of my tax dollars, I want our Government to consider open source software products in every category they are available. I agree with many of those who have engaged in a dialog about this letter since its posting, especially Mr. Christiansen when he said: “I don't want to mandate everything the government does should be open source.. I think software should stand on its own merits, but I honestly believe that one of its merits should be the sourcing of the software, the way it's built and who owns the technology."

I’m proud of the traction Jaspersoft has made in helping government customers spend less and get more. Here’s to hoping this open letter is heartily considered, in the Oval Office and throughout our nation’s government.

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, February 2, 2009

2009 BI Market Prediction #4: Consumerization of Information

The facts are clear: the workforce is evolving, and its changing expectations for software will drastically transform software development and usage, especially in the enterprise software market. This shift is already underway. The consumerization of information is based on a very real workforce demographic shift that becomes even more pronounced starting in 2009. As the aging workforce in the largest economies continues to retire (in the U.S., it’s the baby boomer generation) and more young workers enter and climb higher, we’ll see a widening gap (I call it the “expectation gap”) between the expected behavior of enterprise applications and their actual behavior.

Younger workers have grown up with computers and, by and large, the Internet. They’ve never NOT been connected. Therefore, their expectations for how software systems should behave are vastly different from those of an older worker who has grown into computers and software during the course of his career. My favorite example is a personal one. One evening, while helping my 14-year-old daughter complete her science homework, which meant graphing her results data, printing, and pasting onto a poster, I experienced this prediction first-hand. She and I were both frustrated trying to label an Excel chart properly. Rather than persevere, my daughter went to Google, searched for “free graphs,” and brought up a very simple web page (web service) that allowed her to enter her data and click “Graph It!” It produced a perfect pie chart, which she copied, pasted and printed. She was done in seconds.

Therefore, those software vendors that design products that work according to the new web principles will fare well with this younger generation of workers. Those software vendors that do not will become less relevant. While the effects of the consumerization of information will have a big impact on BI tools in 2009, they’ll compound each year thereafter – as more baby boomers retire and more Gen X and Gen Y workers replace them. And, although my example here focuses on the United States, this same demographic phenomenon will play out similarly in most of the major economies over the course of the next decade.

I’m often asked to predict which software vendors will gain advantage as a result of the consumerization of information and which will fall aside. So, I’ll turn this question to you . . . who do you believe stands to gain and lose as the workforce landscape shifts?

Brian Gentile

Chief Executive Officer

Jaspersoft

Monday, January 26, 2009

2009 BI Market Prediction #3: The Besieged CIO

My premise for prediction number three is that the Besieged CIO will come under increasing strategy and cost pressure - and continue to look for capable, low cost alternatives wherever they might exist. I call him or her the besieged CIO because the pressure to continue doing more with less, in this top IT role, never ceases. The CEO is applying constant pressure to drive the company to innovate and create top-line growth. The CIO’s budget, however, isn’t growing proportionately and it is a constant challenge to maintain existing IT systems with what amounts to fewer funds so that more resources can be directed to new technology initiatives (to fuel the CEO’s agenda).

So, what’s a CIO to do? Answer: find capable, lower-cost alternatives in all technology categories. This is where I believe new development and delivery models will become even more popular, which benefits open source and software-as-a-service vendors because, in most cases, the up-front and on-going costs are just lower (especially in the case of open source). This phenomenon will continue to expand the high-growth space of virtualization as well as the latest hype area: cloud computing.

Supporting my prediction, very recent survey work by analyst firm Gartner indicates that this will be a tough year for CIOs. Among other findings, Gartner’s research shows expected flat IT budgets, the need to restructure to meet the difficult economic conditions, and an on-going #1 technology priority placed on business intelligence. Gartner states that CIOs expect to “invest in business intelligence applications and information consolidation in order to raise enterprise visibility and transparency, particularly around sales and operational performance. These investments are expected to pay extra dividends by responding to new regulatory and financial reporting requirements.”

Lower cost and simpler-to-use BI solutions that enable widespread use of dashboards, interactive reports, and deeper analytics will be the tonic and salve that enable the besieged CIO to make it through these tough times. I’m proud to know that Jaspersoft will be doing its part by delivering the world’s best (and lowest cost) business intelligence products and services.

Brian Gentile

Chief Executive Officer

Jaspersoft

Sunday, January 18, 2009

2009 BI Marketplace Prediction #2: Technology Shifts

The second and related prediction is that key Technology Shifts will be another disadvantage for these proprietary BI behemoths. In the last year, we’ve seen some important new technologies emerge and begin to really influence BI – and I believe these will have an even more significant effect in the coming year. Some examples of these technology shifts include columnar-oriented data warehouse tools, query optimizers, SOA/web services (and overall componentized design), mash-ups, in-memory analytics, integrated search, and the use of rich media services to provide more compelling (web-based) user experiences. For BI tools and software, the question is “which vendors will be able to deliver a more modern, dynamic experience using these new technologies?” And, “How will new customer needs be addressed by harnessing some of these new technologies?” The more dated a product and its architecture, of course, the more difficult it will be for the vendor to deliver new functionality that truly takes advantage of these new technologies and solves new problems for a customer.

Just this month, Gartner published its own list of BI predictions for 2009. Second on its list of five predictions is more distributed (across enterprise business units) funding for BI software and solutions. The problem here, Gartner wrote, is that "business users have lost confidence in the ability of [IT] to deliver the information they need to make decisions." Exactly. And, this lower confidence is made worse by business users seeing simple but compelling BI functionality in new tools that are not yet on the IT standards list. Let’s make 2009 the year that easy-to-use and compelling BI functionality is actually used broadly in an enterprise.

Brian Gentile

Chief Executive Officer

Jaspersoft